LISTEN RESEARCH

How AI Moderation Improves Participant Comfort and Honesty

What Listen Labs Participants Taught Us About AI vs Human Moderation

Key Takeaways

Both moderation styles work remarkably well. General comfort is high in both modes and essentially identical: 92% of participants report top comfort levels for human sessions and 92% for AI sessions. This baseline parity reveals something important. The future isn't about replacing one with the other, but understanding when each approach shines.

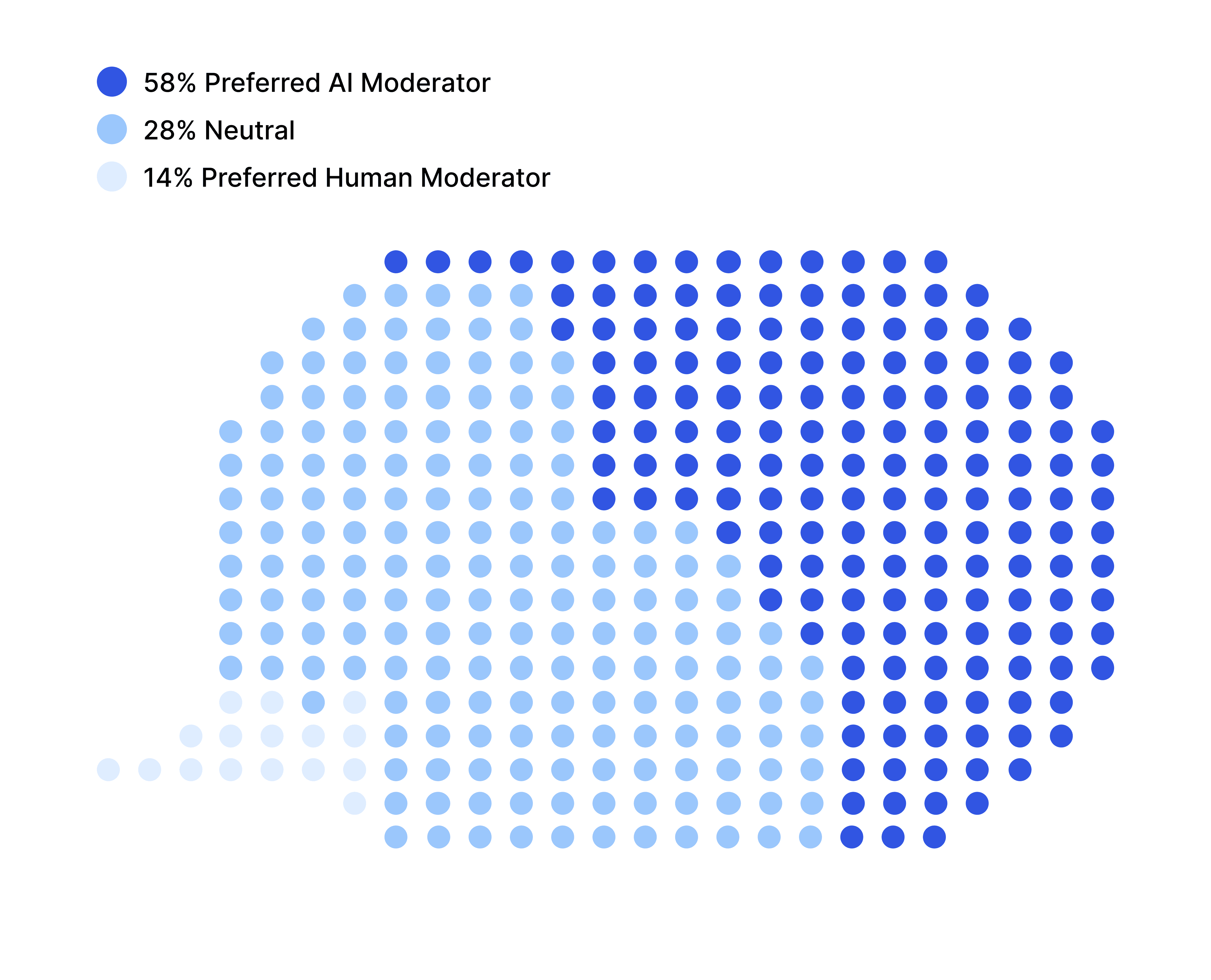

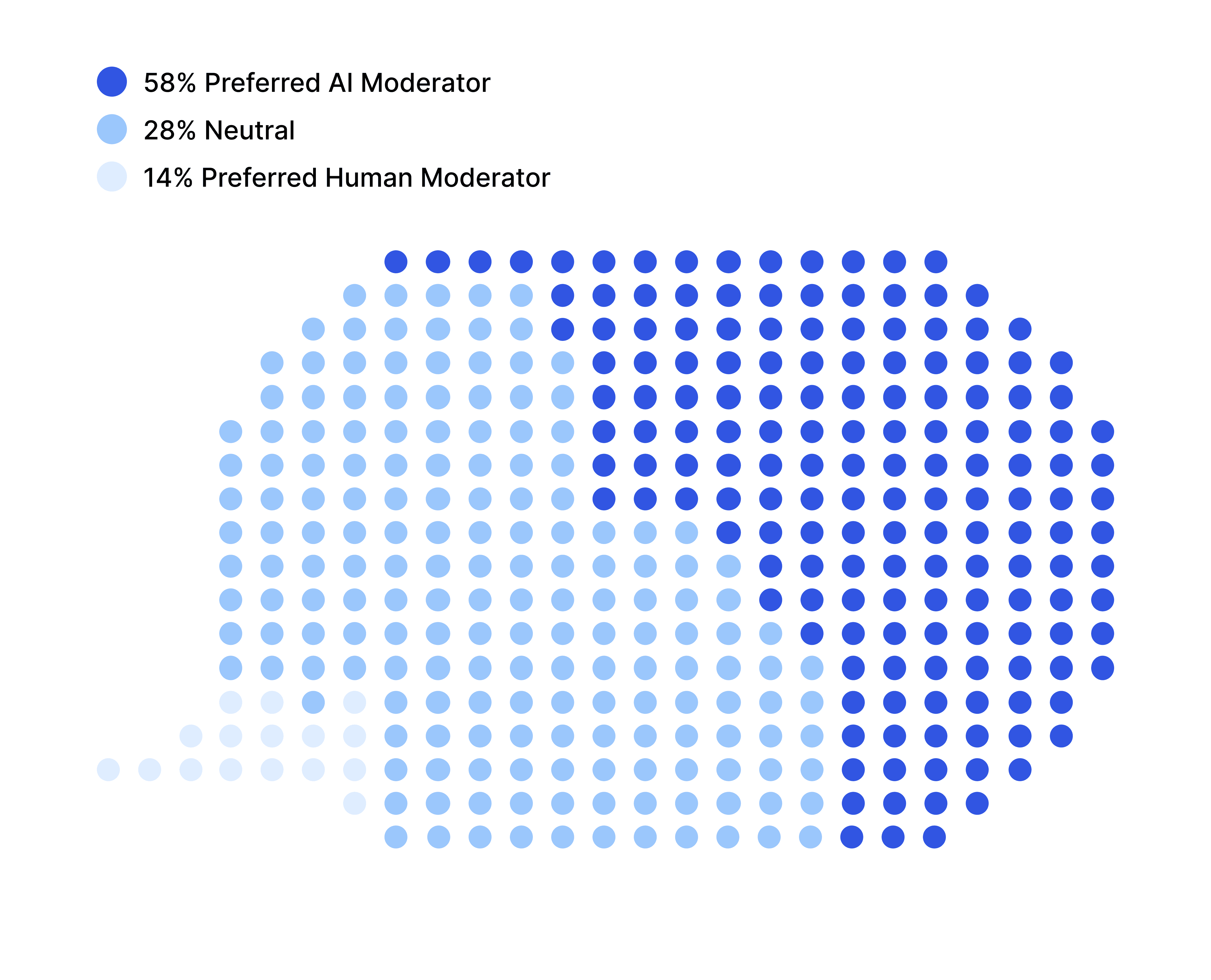

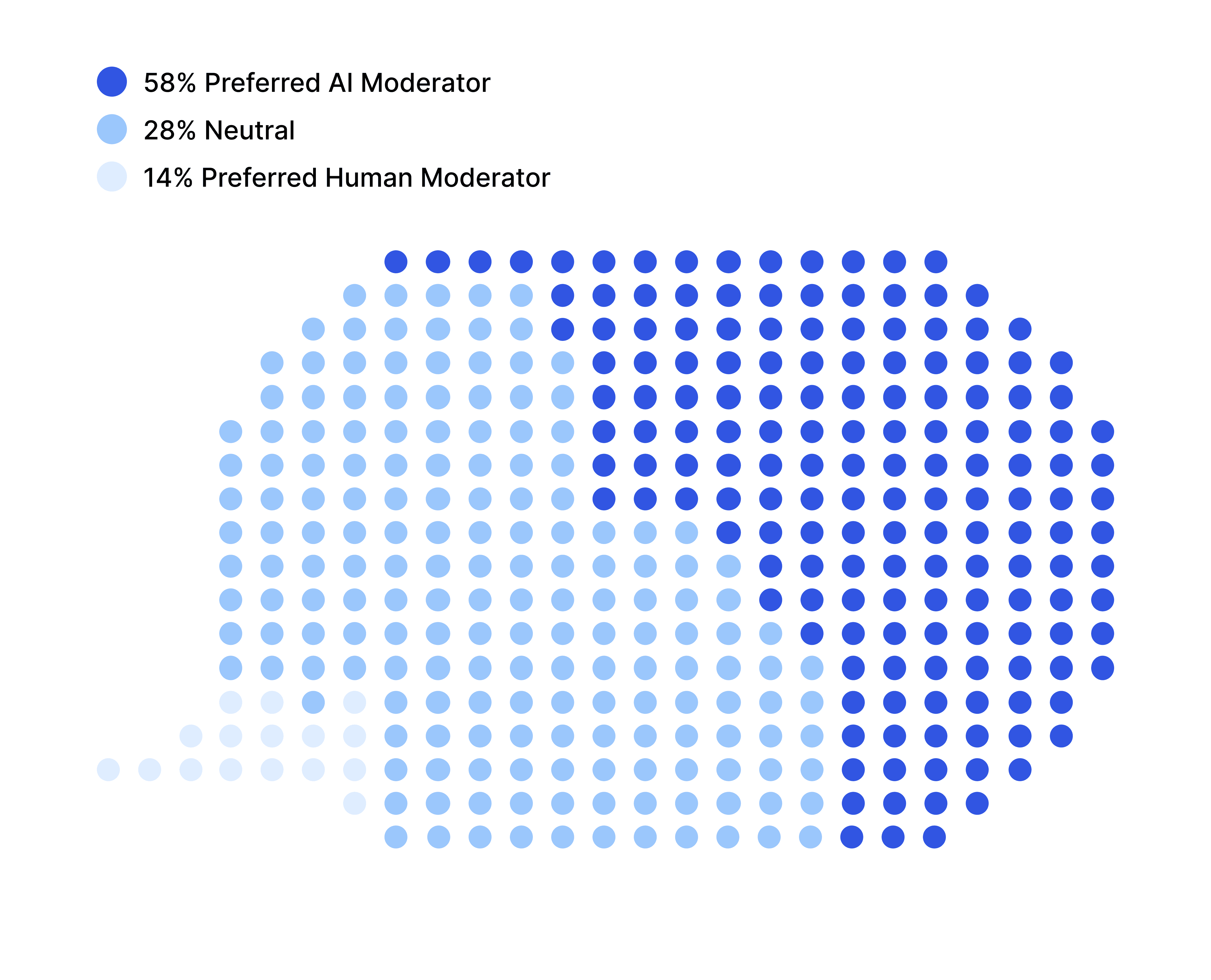

Topic sensitivity drives preference. People gravitate toward AI for private and polarizing topics. Political and religious discussions? 58% prefer AI moderation. Personal finances? Strong AI preference. But for everyday product feedback or shopping habits? Moderation format genuinely doesn't matter. Importantly, while some topics showed less preference for AI compared to others, no topics showed participants preferring humans over AI in our concept test.

Different comfort drivers for each format. AI comfort stems from reduced interpersonal judgment and social pressure (60%), structured pacing (34%), and convenience/control (30%). Human comfort comes from warmth, empathy, and rapport (68%), plus skilled probing that helps participants articulate complex thoughts.

An exceptionally high percentage feel less judged with AI. 32% of participants explicitly stated they feel less judged with AI moderation. That's nearly a third of all participants experiencing fundamentally greater psychological safety. This comfort translates directly into more candid, valuable responses.

Scheduling flexibility drives preference for AI moderation. 30% of participants preferred the ability to schedule at their convenience, which comes with AI moderation. Being able to participate on your own time, in your own space, reduces anxiety. You're not rushing to make an appointment or sitting in an unfamiliar room with a stranger.

We spend most of our lives performing for other people. We adjust what we say based on who's listening. We soften opinions that might be unpopular. And we hide the parts of ourselves that feel too vulnerable or too different.

Sociologist Erving Goffman called this "the presentation of self" - the idea that everyday life is essentially a performance for other people. The way we dress is like a costume. Our mannerisms are choreography. The words we choose are scripted lines.

For anyone trying to understand what people really think, whether you're doing market research, UX studies, or customer interviews, this performance is a massive problem. You're not getting real insights when someone is busy managing impressions.

So, we ran a study with 50 people who had done both human-moderated and AI-moderated research sessions.

We wanted to know: when do people drop the mask? When do they feel comfortable enough to tell the truth?

The findings surprised us.

"Scheduled sessions at specific times and locations are much more difficult, especially if I have to travel somewhere, then I have to take time off of work. Being able to do it from my home just makes it easier for me to participate, and I hope that that helps researchers too because they get more data."

Participant #16

Topic Deep Dive: When Format Matters Most

Political and Religious Views: The Strongest Pull Towards AI

This topic showed the strongest preference in the study: 58% of participants preferred AI moderation for discussing political and religious views.

The psychology is straightforward. People worry about micro-expressions. They notice tone changes. They pick up on subtle signals that might indicate disagreement or judgment. With political topics more polarized than ever, that worry intensifies.

One participant put it simply:

“Political and religious topics are such hot, divisive issues. I would be afraid of having a moderator who might be on the opposite side treating me differently or possibly starting an argument. AIs are not going to do that.”

Participant #22

This matters for anyone trying to understand how political beliefs influence consumer behavior, product preferences, or brand perception. Human moderators, no matter how well trained, carry visible identities that participants will read and react to. AI removes that variable entirely.

Personal and Sensitive Topics: Where AI Creates Safety

Mental health discussions showed a 40% preference for AI versus 32% for human moderators, with the remainder neutral. This reflects a mixed response. Some participants preferred the anonymity and non-judgmental nature of AI moderation when discussing mental health issues. Others leaned towards human moderation, where they could talk to someone who can immediately empathize and relate.

"I would be more open to AI because, like I said earlier, I'm not looking at a human. I'm not seeing their reaction. You know, you're being very vulnerable in these situations. So it's best just to talk about it and go to the next thing, you know, without really having to deal with the personal aspect of it."

Participant #48

Personal finances followed a similar pattern. There's a particular discomfort around money that stems from how we tie financial status to self-worth. Discussing debt, spending habits, or financial struggles with another person triggers that discomfort. With AI, it disappears.

Sexual and intimate topics also favored AI. The pattern holds: anything that carries social stigma or feels personally vulnerable, people prefer discussing with AI.

Where Human Moderators Matter

Human moderators maintain clear advantages for specific situations:

Complex medical discussions - People want expert knowledge and the ability to ask clarifying questions about health concerns. They need to feel heard and understood when discussing physical symptoms or treatment experiences.

Specialized topics requiring deep expertise - When participants need guidance navigating technical or specialized subjects, human moderators can provide context and explanations that help generate richer responses.

Nuanced emotional experiences - Situations requiring genuine empathy and the ability to read between the lines benefit from human moderation. Humans excel at creating psychological safety through connection, offering in-the-moment support, and helping participants feel truly heard during vulnerable moments.

The Numbers Behind the Shift

The key insight isn't that AI is better or worse than humans. It's that different research goals call for different approaches.

Why This Matters: The Experience-Data Connection

There's a direct line from participant experience to data quality. Here's how it works:

When participants feel safe and unjudged, they tell the truth. When they tell the truth, you get better data. When you get better data, you make better decisions.

It sounds simple, but it's powerful. The whole point of doing research is to understand what people actually think, feel, and do—not what they think they should say to look good in front of another person.

AI moderation doesn't just make research more efficient or scalable. It fundamentally changes what people are willing to share.

A Practical Framework: Choosing Your Approach

If you're designing research and wondering which moderation approach to use, here's a practical guide:

Choose AI Moderation For:

Politically charged topics

Mental health discussions

Stigmatized behaviors or issues

Personal finance topics

Sexual or intimate topics

Any research where social judgment might inhibit honesty

Large-scale studies across many participants

Global research across different languages and cultures

Choose Human Moderation For:

Complex medical discussions

Situations needing empathetic responses

When building deep rapport is essential

Nuanced emotional experiences that need human understanding

Consider a Hybrid Approach:

Start with AI moderation to gather baseline data without judgment. Then bring in human moderators for follow-up depth on specific areas that need expert knowledge or empathetic exploration.

Several participants in our study suggested this themselves—they saw value in combining AI's judgment-free initial gathering with human insight for deeper analysis.
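For teams that triage many study briefs, the guide above can be sketched as a small lookup. This is only an illustration, not part of the study or the Listen Labs platform: the topic labels, the `recommend` function, the rule that a brief mixing both lists gets a hybrid design, and the default to AI for everyday topics are all assumptions made for the example.

```python
from enum import Enum


class Moderation(Enum):
    AI = "ai"
    HUMAN = "human"
    HYBRID = "hybrid"


# Hypothetical shorthand labels for the list items in the guide above.
AI_TOPICS = {
    "political",
    "mental_health",
    "stigmatized",
    "personal_finance",
    "sexual_intimate",
}
HUMAN_TOPICS = {
    "complex_medical",
    "empathetic_response",
    "deep_rapport",
    "nuanced_emotional",
}


def recommend(topics: set[str]) -> Moderation:
    """Suggest a moderation format for a study covering the given topics."""
    needs_ai = bool(topics & AI_TOPICS)
    needs_human = bool(topics & HUMAN_TOPICS)
    if needs_ai and needs_human:
        # Assumption: a mix of both lists maps to the hybrid approach
        # (AI first, human follow-up).
        return Moderation.HYBRID
    if needs_human:
        return Moderation.HUMAN
    # Assumption: everyday topics default to AI, since participants
    # reported no format preference there.
    return Moderation.AI


print(recommend({"political", "complex_medical"}).value)
```

A real brief will rarely reduce to a set of labels, so treat the output as a starting point for discussion rather than a decision.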

The Bigger Picture

This research points to a shift in how we should think about AI in research and communication more broadly.

The question isn't "Will AI replace humans?"

The question is "What new possibilities does AI unlock?"

For sensitive topics, AI creates a judgment-free zone that most people can't achieve with another human, no matter how empathetic or well-trained that human is. That's valuable.

For everyday topics, AI offers efficiency and scale without sacrificing quality, since participants don't care about moderation format when there's no social risk involved. That's useful.

For complex topics requiring expertise and empathy, humans still excel. That's worth remembering.

The future probably isn't all-AI or all-human. It's matching the right approach to the right situation, understanding what each format does well, and being honest about the limitations of both.

What this study makes clear is that AI moderation isn't just a cost-saving measure or a way to speed up research. For certain topics, especially the ones that matter most for understanding authentic human behavior, it's increasingly becoming the better way to get people to tell you what they actually think.

Ready to unlock deeper insights?

Whether you need to understand political viewpoints, explore sensitive customer experiences, or gather honest feedback at scale, Listen Labs' AI-moderated research platform helps you get the truth behind the performance.

Our AI moderators create a judgment-free environment that makes authentic disclosure possible, while our platform gives you the tools to analyze and act on those insights.

Book a 15-minute walkthrough to learn more.

Note: All participants provided explicit consent for their videos, quotes, and interview screenshots to be used for the purpose of this blog post.

